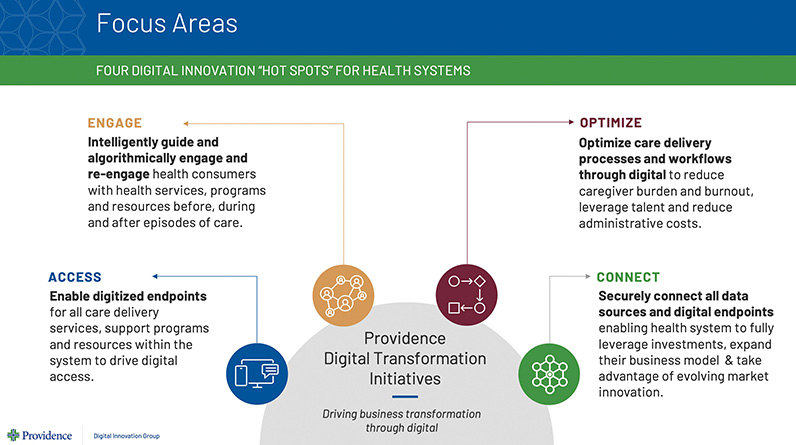

The Providence Digital Innovation Group says it works "to deliver more simple, accessible and equitable health care" and channels its efforts into various focus areas.

Sara Vaezy is in the problem-solving business. She and the Providence Digital Innovation Group she leads build technology to make it easier for people to get healthier and for clinicians to do their jobs better.

Vaezy is executive vice president and chief strategy and digital officer at Providence St. Joseph Health. She was a CHA Tomorrow's Leaders honoree in 2019.

The Providence Digital Innovation Group has incubated multiple companies for spinoff. This October, it announced its fourth, Praia Health, which adopts the digital flywheel concept used by Starbucks, Amazon, Netflix and others: capturing various measures of customer use and applying algorithms based on that information to enhance the customer experience.

The Praia Health platform connects people to services, products and resources, such as scheduling, medical records, exercise and education programs, and virtual visits. For a patient who uses the platform to set up a doctor's appointment and then learns in that appointment that he or she has high cholesterol, the app might suggest simple workouts and options for lab appointments. The platform launched within Providence in January 2022 and supports more than 3 million user accounts. In 2022, it delivered more than $20 million in measurable value back to Providence, the system says.

The Providence Digital Innovation Group is in the process of rolling out the technology to a select group of health systems and other partners.

Vaezy's role also includes assuring a strategic approach to the promise of artificial intelligence, or AI, in health care. Catholic Health World talked to Vaezy about the widening use of digital technology in health care. Her responses have been edited for length and clarity.

What are you all working on right now?

We've been making investments that enable the use of artificial intelligence for over eight years; however, the technology itself is a lot more powerful now. It uses deep learning and neural networks, which are essentially like the computer science equivalent of a human brain. About 10 years ago, the advancements in the technology resulted in the performance of artificial intelligence models in controlled settings to be greater than that of a human. The example is one of these AI generative models passing the United States Medical Licensing Examinations.

Now, that doesn't mean that they can deliver care in a real-life setting, but it does mean that they can answer questions in a pretty robust way.

One of the things I get the most excited about is this notion of democratizing the usage of technology for the average health system user. Historically, if we wanted to do predictive scheduling, we would partner with some big vendor or our own data scientists. What we'll soon be able to do, because of the foundations we have in place plus these new technologies, is enable your average business user to actually build what we call applications, so that they can get their job done in a much more efficient, effective way. The power can multiply.

That's how we're seeing it from a foundational impact perspective. We bring together innovation and compassion. We are leaning in very heavily on this because it helps us deliver on our mission and bring innovation to delivering on our mission, and doing so in a way that will be sustainable into the future.

Where does the person and the compassion come in?

What we're able to do is take the stuff where that personal touch isn't needed and get it off the plate of the people that need to do the work and need to be there for their patients.

You've probably been to the doctor, and you might notice they sit there and chart on their computer. In some cases, they're not even looking at you because they must do the documentation. And by the way, they also take more documentation home with them. They're doing things at nighttime in bed. They call it pajama time. And that's contributing to burnout, and it's contributing to a lot of difficulty for our clinicians.

One of the things that we can do now is deploy ambient technology that listens to that patient conversation in the setting of that visit, so the doctor, the clinician, or the nurse practitioner can sit face to face and have a conversation with the patient. It can reduce burnout. We're partnering with a company called Nuance, and they're kind of the leader in voice-related technologies, and they're spending a lot of time on this ambient space.

Another example is that during COVID, the number of messages patients sent to clinicians expanded significantly. We're tackling the in-basket problem in a couple of different ways. One is something we call message deflection, which is trying to help the patient before they even send a message. We have a chatbot that lives on our website and in our mobile app and in MyChart, and the chatbot will pop up and say, "How can I help you?"

Sometimes patients still need to send the clinician an email or an in-basket message. We have another technology that is like an in-basket assistant for our clinicians, so that it reads the message as it comes, and it can help summarize the intent and what needs to get done for the patient.

We're really leaning into assisting (clinicians) and augmenting (their work), so that we can enhance the quality of that compassionate experience.

Overall, how should people see these developments? Do we need to be careful?

I think AI has the potential to be the best thing that's happened to our patients and our providers in terms of a technology support for them. It has tremendous, tremendous potential and is very exciting. At the same time, we need to be very careful. There are lots of land mines out there in terms of potential issues with bias. We actually have a guardrails group that's determining policies but also putting in place technical infrastructure to help contain the risks. What we need to do is test in a way that is fairly contained with a lot of mitigation built in so that we can learn and start to uncover the unknown unknowns. Otherwise, we'll never get started and then we'll be in deep trouble.

Are you going to have critics that say computers are taking away people's jobs?

I oversee marketing in our system, and here is what I would say: marketers who use AI will replace marketers who do not use AI. But that doesn't mean that marketers are going away. Our work just evolves. It changes over time, and it has to respond to the signs of the times. We don't have telephone operators plugging telephone wires in like they used to back in the day either. We have cell towers, a different set of infrastructure.

AI will be the creator of new types of work that are more value added to helping people. We shouldn't have people just cutting and pasting data from one form to another. That's something a computer can do. We have lots of valuable resources. Humans should be working on higher-order things. There's a whole workforce training element to all of this as well, and we have to be very mindful of it. But that doesn't mean we have to slow down in terms of trying to help people.